1-800-805-5783

Interpretability refers to the degree to which human experts can understand and explain a system’s decisions or outputs; it involves understanding the model’s internal workings. Explainability, by contrast, focuses on providing human-understandable justifications for a model’s predictions or decisions: it is about communicating the reasoning behind a particular output.

Generative AI models such as deep neural networks are often labeled ‘black boxes.’ The label signals that their decision-making processes are intricate and opaque, which makes it hard to understand how they arrive at their outputs. This lack of transparency can make trust, and therefore adoption, harder to achieve.

Explainability is pivotal in fostering trust between humans and AI systems, and that trust is a prerequisite for widespread adoption. By understanding how a generative AI model reaches its conclusions, users can assess its reliability, identify biases, improve model performance, and comply with regulations.

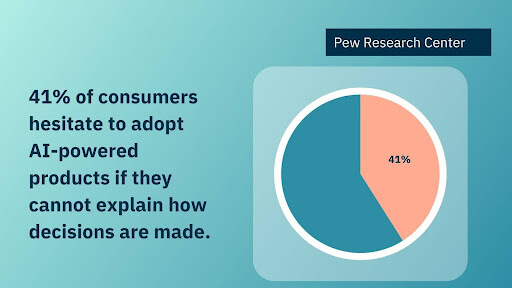

A recent study by the Pew Research Center found that 41% of consumers hesitate to adopt AI-powered products when those products cannot explain how their decisions are made.

Despite their impressive capabilities, generative AI models pose significant challenges to interpretability and explainability. Understanding these models’ internal mechanisms is essential for fostering trust, identifying biases, and ensuring responsible deployment.

Generative models, particularly deep neural networks, have complex and intricate architectures. With millions or even billions of parameters, these models often operate as black boxes, making it difficult to discern how inputs are transformed into outputs.

Unlike traditional machine learning tasks with clear ground-truth labels, generative models often lack definitive reference points. Evaluating the quality and correctness of generated outputs can be subjective and challenging, which hinders the development of interpretability methods for generative AI.

Generative models are also inherently stochastic: their outputs depend on random noise inputs and internal model states, so the same input can produce different results from run to run. This variability makes it difficult to trace the origin of specific features or attributes in the generated content, further complicating interpretability efforts.

Computer scientists, statisticians, and domain experts must collaborate to overcome these obstacles. Developing novel interpretability techniques and building trust in generative AI is critical for its responsible and widespread adoption.

Understanding the inner workings of complex generative models is crucial for building trust and ensuring reliability. Interpretability techniques provide insights into these models’ decision-making processes.

Feature importance analysis helps identify the most influential input features in determining the model’s output. This technique can be applied to understand which parts of an image or text contribute most to the generated content.
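As a rough illustration, the sketch below estimates feature importance by occlusion (leave-one-out ablation): each input token is removed in turn and the drop in the model’s score is recorded. `toy_score` is a hypothetical stand-in for a real model’s score (for example, the likelihood of a generated output), chosen so the expected importances are easy to verify; occlusion is only one of several feature-importance methods.

```python
from typing import Callable, List, Tuple


def toy_score(tokens: List[str]) -> float:
    """Hypothetical stand-in for a model's score of its generated output.

    Assumption: the score is a weighted count of a few keywords, so the
    "true" importance of each token is known and easy to check.
    """
    weights = {"sunset": 2.0, "beach": 1.5, "photo": 0.5}
    return sum(weights.get(tok, 0.0) for tok in tokens)


def occlusion_importance(
    tokens: List[str], score_fn: Callable[[List[str]], float]
) -> List[Tuple[str, float]]:
    """Importance of each token = drop in score when that token is removed."""
    base = score_fn(tokens)
    result = []
    for i, tok in enumerate(tokens):
        ablated = tokens[:i] + tokens[i + 1:]  # leave token i out
        result.append((tok, base - score_fn(ablated)))
    return result


if __name__ == "__main__":
    prompt = ["a", "photo", "of", "a", "beach", "at", "sunset"]
    ranked = sorted(occlusion_importance(prompt, toy_score), key=lambda p: -p[1])
    for tok, score in ranked:
        print(f"{tok:>8}: {score:.2f}")
```

The same loop applies to images by masking patches instead of dropping tokens; for large inputs it becomes expensive, since every feature needs its own forward pass.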

Attention mechanisms have become integral to many generative models. Visualizing attention weights can provide insights into the model’s focus during generation.
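The following is a minimal sketch of where attention weights come from and how they can be inspected, assuming scaled dot-product attention over hypothetical 2-D query and key vectors. In a real transformer the queries and keys are learned projections of token embeddings, there are many heads and layers, and the resulting matrices are usually plotted as heatmaps rather than printed as text.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries: List[Vector], keys: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention weights: row i = softmax(q_i . k_j / sqrt(d))."""
    d = len(keys[0])
    return [
        softmax([sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys])
        for q in queries
    ]


def render(weights: List[Vector], tokens: List[str]) -> None:
    """Print a crude text heatmap: each '#' is roughly 10% of a row's attention."""
    print(" " * 9 + " ".join(f"{t:<10}" for t in tokens))
    for tok, row in zip(tokens, weights):
        cells = " ".join(f"{'#' * round(w * 10):<10}" for w in row)
        print(f"{tok:>6} | {cells}")


if __name__ == "__main__":
    tokens = ["the", "cat", "sat"]
    # Hypothetical 2-D vectors; a real model uses learned query/key projections.
    vecs = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
    render(attention_weights(vecs, vecs), tokens)
```

Each row sums to 1, so it can be read as how one token distributes its focus over the sequence. Attention weights indicate where a model focuses, which does not always coincide with why it produced an output, so they are best read alongside other attribution techniques.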

Saliency maps highlight the input regions with the most significant impact on the model’s output. By identifying these regions, we can better understand the model’s decision-making process.
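A minimal sketch of a vanilla gradient saliency map: the saliency of each input pixel is the magnitude of the gradient of the model’s output with respect to that pixel. `toy_model` and its `WEIGHTS` are hypothetical, and the gradient is estimated with central finite differences only to keep the example dependency-free; real implementations use the automatic differentiation built into their deep learning framework.

```python
import math
from typing import Callable, List

Image = List[List[float]]

# Hypothetical per-pixel weights: the toy model "cares" most about the centre.
WEIGHTS = [
    [0.1, 0.2, 0.1],
    [0.2, 1.0, 0.2],
    [0.1, 0.2, 0.1],
]


def toy_model(img: Image) -> float:
    """Stand-in for a model's scalar output score on a 3x3 grayscale image."""
    z = sum(w * p for wrow, prow in zip(WEIGHTS, img) for w, p in zip(wrow, prow))
    return math.tanh(z)


def saliency_map(model: Callable[[Image], float], img: Image, eps: float = 1e-4) -> Image:
    """|d model / d pixel| for every pixel, via central finite differences."""
    sal = []
    for r, row in enumerate(img):
        sal_row = []
        for c in range(len(row)):
            plus = [list(x) for x in img]   # copy so the input is not mutated
            minus = [list(x) for x in img]
            plus[r][c] += eps
            minus[r][c] -= eps
            grad = (model(plus) - model(minus)) / (2 * eps)
            sal_row.append(abs(grad))
        sal.append(sal_row)
    return sal


if __name__ == "__main__":
    image = [[0.5] * 3 for _ in range(3)]
    for row in saliency_map(toy_model, image):
        print(" ".join(f"{v:.3f}" for v in row))
```

For this toy model the centre pixel receives the highest saliency because it carries the largest weight, which is the kind of pattern a saliency map is meant to surface.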

Layer-wise relevance propagation (LRP) is a technique for explaining the contribution of each input feature to the model’s output by propagating relevance scores backward through the network.
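A minimal sketch of LRP using the epsilon rule (LRP-ε) on a tiny bias-free ReLU network with hand-picked, hypothetical weights. Relevance starts at the output score and is redistributed to each lower-layer unit in proportion to its contribution `a_j * w_jk` to the pre-activation `z_k`; the small `eps` term only stabilises the division. Other LRP rules exist and distribute relevance differently.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # W[j][k]: weight from lower unit j to upper unit k


def forward(x: Vector, layers: List[Matrix]) -> List[Vector]:
    """Activations of every layer, input first. ReLU on hidden layers,
    linear output layer, no biases."""
    acts = [x]
    for i, W in enumerate(layers):
        a = acts[-1]
        z = [sum(a[j] * W[j][k] for j in range(len(a))) for k in range(len(W[0]))]
        if i < len(layers) - 1:
            z = [max(0.0, v) for v in z]
        acts.append(z)
    return acts


def lrp_epsilon(x: Vector, layers: List[Matrix], eps: float = 1e-6) -> Tuple[Vector, Vector]:
    """Return (output, input relevances) using the LRP-epsilon rule."""
    acts = forward(x, layers)
    relevance = list(acts[-1])  # relevance starts as the output score
    for W, a in zip(reversed(layers), reversed(acts[:-1])):
        n_in, n_out = len(W), len(W[0])
        z = [sum(a[j] * W[j][k] for j in range(n_in)) for k in range(n_out)]
        # Stabilised ratio R_k / (z_k + eps * sign(z_k)).
        s = [relevance[k] / (z[k] + (eps if z[k] >= 0 else -eps)) for k in range(n_out)]
        # R_j = a_j * sum_k w_jk * s_k
        relevance = [a[j] * sum(W[j][k] * s[k] for k in range(n_out)) for j in range(n_in)]
    return acts[-1], relevance


if __name__ == "__main__":
    layers = [[[1.0, -1.0], [0.5, 1.0]], [[1.0], [2.0]]]  # hypothetical weights
    out, rel = lrp_epsilon([1.0, 2.0], layers)
    print("output:", out, "relevances:", [round(r, 4) for r in rel])
```

With `x = [1.0, 2.0]` the output is 4.0 and the input relevances come out at roughly -1.0 and 5.0: they sum back to the output (the conservation property LRP is built around), and the negative value marks a feature that pushed the score down.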

Employing these interpretability techniques can help researchers and practitioners gain valuable insights into generative models’ behavior, leading to improved model design, debugging, and trust.

Explainability is crucial for understanding and trusting the decisions made by generative AI models. Various techniques have been developed to illuminate the inner workings of these complex systems.

Model-agnostic methods can be applied to any machine learning model, including generative AI models.

LIME (Local Interpretable Model-Agnostic Explanations): Approximates the complex model with a simpler, interpretable model locally around a specific data point. LIME has been widely used to explain image classification and text generation models.
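A simplified sketch of the LIME idea: sample perturbations around one input, weight them by proximity with an exponential kernel, and fit a weighted linear model whose coefficients serve as the local explanation. `black_box`, the perturbation scale, and the kernel width are hypothetical choices; the `lime` library itself adds interpretable input representations (such as superpixels or word presence) and feature selection, which this sketch omits.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def black_box(x: Sequence[float]) -> float:
    """Hypothetical opaque, nonlinear model we want to explain locally."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lime_explain(
    f: Callable[[Sequence[float]], float], x0: List[float],
    n_samples: int = 500, scale: float = 0.1, kernel_width: float = 0.25, seed: int = 0,
) -> Tuple[float, List[float]]:
    """Fit g(z) = b + w . (z - x0) to f, weighting samples by closeness to x0."""
    rng = random.Random(seed)
    d = len(x0)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    XtWX = [[0.0] * (d + 1) for _ in range(d + 1)]
    XtWy = [0.0] * (d + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        z = [xi + di for xi, di in zip(x0, delta)]
        w = math.exp(-sum(di * di for di in delta) / kernel_width ** 2)  # proximity
        row = [1.0] + delta  # intercept column + centred features
        y = f(z)
        for i in range(d + 1):
            XtWy[i] += w * row[i] * y
            for j in range(d + 1):
                XtWX[i][j] += w * row[i] * row[j]
    beta = solve(XtWX, XtWy)
    return beta[0], beta[1:]  # intercept, per-feature local weights


if __name__ == "__main__":
    intercept, weights = lime_explain(black_box, [1.0, 2.0])
    print(f"intercept={intercept:.3f} weights={[round(w, 3) for w in weights]}")
```

Near `x0 = [1.0, 2.0]` the fitted weights come out close to 2 and 3, the local slopes of `x[0] ** 2 + 3 * x[1]`, even though the black box itself is nonlinear.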

SHAP (SHapley Additive exPlanations): Based on cooperative game theory, SHAP assigns each feature an importance value for a given prediction. It provides local explanations for individual predictions and, by aggregating them, a global view of feature importance.
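For a small number of features, Shapley values can be computed exactly by enumerating every coalition, which makes the game-theoretic definition concrete. This sketch assumes that features outside a coalition are replaced by a single baseline value, which is one of several conventions; `toy_model` is hypothetical. The cost grows as 2^n, which is why libraries such as `shap` rely on approximations and model-specific algorithms for realistic models.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def toy_model(z: Sequence[float]) -> float:
    """Hypothetical model: one additive term plus one interaction term."""
    return 2.0 * z[0] + z[1] * z[2]


def shapley_values(
    f: Callable[[Sequence[float]], float], x: List[float], baseline: List[float]
) -> List[float]:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    x, base = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phi = shapley_values(toy_model, x, base)
    print("phi:", [round(p, 6) for p in phi])
    print("sum:", round(sum(phi), 6), "f(x) - f(base):", toy_model(x) - toy_model(base))
```

Here the additive term `2 * x[0]` is credited entirely to feature 0, the interaction `x[1] * x[2]` is split equally between features 1 and 2 (values of 2, 3 and 3), and the values sum to f(x) minus f(baseline), which is the additivity property SHAP is named for.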

Model-specific techniques, by contrast, are tailored to particular generative model architectures.

Incorporating human feedback can improve both the explainability and the performance of generative AI models.

By combining these techniques, researchers and practitioners can gain deeper insights into generative AI models, build trust, and develop more responsible AI systems.

Explainable image generation focuses on understanding the decision-making process behind generated images. This involves:

Case Study: A study by Carnegie Mellon University demonstrated that feature attribution techniques could identify the specific image regions that influenced the generation of particular object instances in a generated image.

Interpretable text generation aims to provide insights into the reasoning behind generated text. This includes:

Case Study: Researchers at Google AI developed a method to visualize the attention weights of a text generation model, revealing how the model focused on specific keywords and phrases to generate coherent and relevant text.

Explainable AI in generative models is crucial for addressing ethical concerns such as:

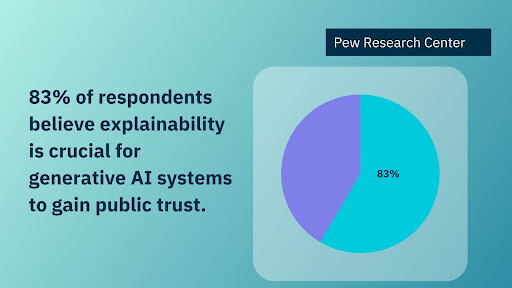

Statistic: A survey by the Pew Research Center found that 83% of respondents believe that explainability is crucial for generative AI systems to gain public trust.

By understanding the factors influencing content generation, we can develop more responsible and ethical generative AI systems.

Explainability is paramount for the responsible and ethical development of generative AI. We can build trust, identify biases, and mitigate risks by comprehending these models’ internal mechanisms. While significant strides have been made in developing techniques for explainable image and text generation, much work remains.

The intersection of interpretability and generative AI presents a complex yet promising frontier. By prioritizing explainability, we can unlock the full potential of generative models while ensuring their alignment with human values. As AI advances, the demand for explainable systems will grow stronger, necessitating ongoing research and development in this critical area.

Ultimately, the goal is to create generative AI models that are not only powerful but also transparent, accountable, and beneficial to society.

[x]cube has been AI-native from the beginning, and we’ve been working with various versions of AI tech for over a decade. For example, we were working with BERT and GPT’s developer interface even before the public release of ChatGPT.

One of our initiatives has significantly improved the OCR scan rate for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement and chat-based interfaces.

Interested in transforming your business with generative AI? Talk to our experts over a FREE consultation today!