1-800-805-5783

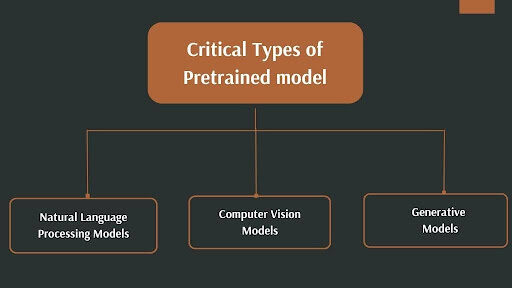

Pre-trained models are AI models trained on massive datasets to perform general tasks. Think of them as well-educated individuals with a broad knowledge base. Rather than starting from scratch for each new task, developers can use these pre-trained models as a foundation, which significantly shortens development time and improves performance.

The popularity of pre-trained models has exploded in recent years due to several factors:

By utilizing pre-trained models, businesses can:

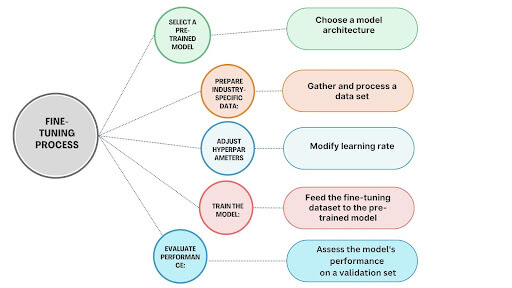

Fine-tuning is the process of adapting a pre-trained model to a specific task or domain. It involves adjusting the model’s parameters using a smaller, domain-specific dataset, which tailors the model’s general knowledge to the nuances of a particular application. The main limitation of foundation pre-trained models is their generality: they may not capture the intricacies of specialized tasks or domains, which is why fine-tuning is needed.

In the following sections, we will explore the intricacies of pre-trained models and how fine-tuning can be applied to various industries.

Pre-trained multitask generative AI models are AI systems trained on massive datasets to perform a wide range of tasks. They are the backbone of many modern AI applications, providing a robust foundation for solving complex problems.

For instance, a language model might be trained on billions of words from books, articles, and code. This exposure equips the model with a deep understanding of grammar, syntax, and even nuances of human language. Similarly, an image recognition model might be trained on millions of images, learning to identify objects, scenes, and emotions within pictures.

The real magic of pre-trained models lies in their ability to transfer knowledge to new tasks. This process, known as transfer learning, significantly reduces the time and resources required to build industry-specific AI solutions.

Instead of training a model from scratch, developers can fine-tune a pre-trained model on their own data, often reaching strong results with far less data and compute.
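To make the idea concrete, here is a minimal sketch in plain Python. It assumes a tiny, hypothetical "pre-trained" feature extractor (`PRETRAINED_WEIGHTS` is made up for illustration) that stays frozen, while only a small task-specific head is trained on a handful of invented domain examples. Real projects would use a deep learning framework and a genuine pre-trained model; this toy only illustrates the division of labor.

```python
import math

# Hypothetical "pre-trained" feature extractor: fixed weights standing in for
# what a real model would have learned from a large general dataset.
PRETRAINED_WEIGHTS = [[0.5, -0.3], [0.8, 0.1], [-0.4, 0.9]]  # 3 features from 2 inputs


def extract_features(x, weights=PRETRAINED_WEIGHTS):
    """Frozen backbone: tanh(W x). These weights are never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


def predict(head, bias, x):
    """Task head: a linear layer on top of the frozen features."""
    feats = extract_features(x)
    return sum(h * f for h, f in zip(head, feats)) + bias


def mse(head, bias, data):
    """Mean squared error of the head on a list of (inputs, target) pairs."""
    return sum((predict(head, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune_head(data, lr=0.1, epochs=200):
    """Train only the small task head with gradient descent on the domain data."""
    head = [0.0, 0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        grad_head = [0.0] * len(head)
        grad_bias = 0.0
        for x, y in data:
            feats = extract_features(x)
            err = sum(h * f for h, f in zip(head, feats)) + bias - y
            for i, f in enumerate(feats):
                grad_head[i] += 2 * err * f / len(data)
            grad_bias += 2 * err / len(data)
        head = [h - lr * g for h, g in zip(head, grad_head)]
        bias -= lr * grad_bias
    return head, bias


# Small, made-up domain dataset: (inputs, target)
domain_data = [
    ([1.0, 0.0], 1.0),
    ([0.0, 1.0], -1.0),
    ([1.0, 1.0], 0.0),
    ([-1.0, 0.5], -1.5),
]

loss_before = mse([0.0, 0.0, 0.0], 0.0, domain_data)
head, bias = fine_tune_head(domain_data)
loss_after = mse(head, bias, domain_data)
print(f"loss before: {loss_before:.4f}, loss after: {loss_after:.4f}")
```

Freezing the backbone is only one option. Depending on the task and the amount of data, practitioners may also update some or all of the pre-trained weights, usually with a small learning rate.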

For example, a pre-trained language model can be fine-tuned to analyze financial news articles, identify potential risks, or generate investment recommendations. Similarly, a pre-trained image recognition model can be adapted to detect defects in manufacturing products or analyze medical images for disease diagnosis.

By leveraging the power of pre-trained models, organizations can accelerate their AI initiatives, reduce costs, and achieve better performance.

Fine-tuning means taking a pre-trained model, which has learned general patterns from massive datasets, and tailoring it to excel at a specific task or within a particular industry. It’s like taking a skilled athlete and training them for one specific sport.

Why Fine-Tune?

Fine-tuning offers several compelling advantages:

By leveraging the power of fine-tuning, organizations can unlock the full potential of pre-trained models and gain a competitive edge in their respective industries.

Finance: Fine-tuning language models for financial news analysis and fraud detection.

Healthcare: Fine-tuning image recognition models for medical image analysis and drug discovery.

Manufacturing: Fine-tuning machine learning models for predictive maintenance and anomaly detection.

Industry: Customer Service

Challenge: Traditional customer service systems often struggle to handle complex queries and provide accurate, timely responses.

Solution: A leading telecommunications company fine-tuned a pre-trained language model on a massive dataset of customer interactions, support tickets, and product manuals. The resulting model significantly enhanced the company’s chatbot capabilities, enabling it to understand customer inquiries more accurately, provide relevant solutions, and even resolve issues without human intervention.

Industry: Pharmaceuticals

Challenge: The drug discovery process is time-consuming and expensive, with a high failure rate.

Solution: A pharmaceutical company used a pre-trained image recognition model to analyze large volumes of biological image data, such as protein structures and molecular interactions. By fine-tuning the model on specific drug targets, researchers could identify potential drug candidates more efficiently.

Industry: Supply Chain Management

Challenge: Supply chain disruptions and inefficiencies can lead to significant financial losses and customer dissatisfaction.

Solution: To improve demand forecasting and inventory management, a global retailer fine-tuned a pre-trained time series model on historical sales data, inventory levels, and economic indicators. The model accurately predicted sales trends, enabling the company to optimize stock levels and reduce out-of-stock situations.

Fine-tuning pre-trained models has emerged as a powerful strategy to accelerate AI adoption across industries. By leveraging the knowledge embedded in these foundational models and tailoring them to specific tasks, organizations can significantly improve efficiency, accuracy, and time to market.

The applications are vast and promising, from enhancing customer service experiences to revolutionizing drug discovery and optimizing supply chains.

Advancements in transfer learning, meta-learning, and efficient fine-tuning techniques continually expand the possibilities of what can be achieved with pre-trained models. As these technologies mature, we can anticipate even more sophisticated and specialized AI applications emerging across various sectors.

The future of generative AI is closely tied to the effective use of pre-trained models. By making fine-tuning a core element of their AI plans, businesses can gain a competitive advantage in a continuously changing digital landscape and put themselves at the forefront of innovation.

1. What is the difference between training a model from scratch and fine-tuning a pre-trained model?

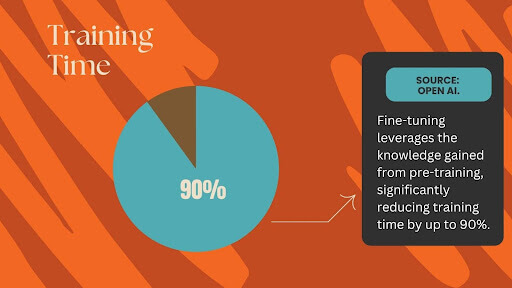

Training a model from scratch involves starting with random weights and learning all parameters from a given dataset. On the other hand, fine-tuning leverages the knowledge gained from a pre-trained model on a massive dataset and adapts it to a specific task using a smaller, domain-specific dataset.
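The contrast can be sketched with a toy linear model. In the example below, both runs get the same small dataset and the same gradient-descent budget; the only difference is the starting point. The dataset, the task weights, and `pretrained_init` (standing in for weights learned on a related, larger task) are all invented for illustration.

```python
import random

# Small, made-up task dataset: targets were generated by the (normally unknown)
# task weights [2.0, -1.0, 0.5].
X = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
Y = [2.0, -1.0, 0.5, 1.5]


def loss(w):
    """Mean squared error of the linear model w . x on the task data."""
    total = 0.0
    for x, y in zip(X, Y):
        pred = sum(wi * xi for wi, xi in zip(w, x))
        total += (pred - y) ** 2
    return total / len(X)


def train(w, lr=0.1, steps=20):
    """Plain gradient descent; the same budget is used for both starting points."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(X, Y):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(X)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


rng = random.Random(0)
scratch_init = [rng.uniform(-0.1, 0.1) for _ in range(3)]  # small random weights
pretrained_init = [1.8, -0.9, 0.6]  # hypothetical weights from a related task

scratch_loss = loss(train(scratch_init))
fine_tuned_loss = loss(train(pretrained_init))
print(f"from scratch: {scratch_loss:.4f}, fine-tuned: {fine_tuned_loss:.4f}")
```

Because the pre-trained starting point is already close to a good solution, the same training budget leaves it with a lower loss than the randomly initialized run. This holds only when the pre-training task is genuinely related to the target task, as it is by construction here.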

2. What are the key factors when selecting a pre-trained model for fine-tuning?

The choice of a pre-trained model depends on factors such as the task at hand, the size of the available dataset, computational resources, and the desired level of performance. When selecting a model, consider its architecture, pre-training data, and performance metrics.
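One simple way to apply these criteria is to filter candidates by task and compute budget, then rank what remains by a relevant metric. The sketch below assumes a hypothetical `Candidate` record; the model names, sizes, and scores are invented placeholders, not real benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str               # hypothetical model name
    task: str               # task family the model was pre-trained for
    params_millions: int    # rough proxy for compute and memory cost
    benchmark_score: float  # made-up score on a benchmark relevant to the task


def shortlist(candidates, task, max_params_millions):
    """Keep models that match the task and fit the budget, best score first."""
    eligible = [
        c for c in candidates
        if c.task == task and c.params_millions <= max_params_millions
    ]
    return sorted(eligible, key=lambda c: c.benchmark_score, reverse=True)


candidates = [
    Candidate("text-small", "text", 110, 0.79),
    Candidate("text-large", "text", 1300, 0.88),
    Candidate("text-medium", "text", 340, 0.84),
    Candidate("vision-base", "image", 90, 0.81),
]

ranked = shortlist(candidates, task="text", max_params_millions=500)
print([c.name for c in ranked])
```

In practice the ranking metric should come from evaluating each shortlisted model on a held-out sample of your own data, since public benchmark scores may not reflect performance in a specific domain.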

3. How much data is typically required for effective fine-tuning?

The amount of data needed for fine-tuning varies depending on the task’s complexity and the size of the pre-trained model. Generally, a smaller dataset is sufficient compared to training from scratch. However, high-quality and relevant data is crucial for optimal results.

4. What are the common challenges faced during fine-tuning?

Common challenges include finding high-quality training data, preventing overfitting, and optimizing hyperparameters. Computational resources and time constraints can also be significant hurdles.
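Early stopping is one widely used guard against overfitting during fine-tuning: training halts once the validation loss stops improving. The helper below is a minimal sketch of that rule; the function name, the `patience` and `min_delta` parameters, and the validation curve are illustrative assumptions rather than any particular library's API.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once the validation loss has failed to improve by more than
    min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, val_loss in enumerate(val_losses):
        if val_loss < best_loss - min_delta:
            best_loss = val_loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Never triggered: training ran through every recorded epoch.
    return len(val_losses) - 1, best_epoch


# Made-up validation curve: improves, then rises as the model starts to overfit.
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=2)
print(stop_epoch, best_epoch)  # 5 3
```

Here training would stop at epoch 5 and the checkpoint from epoch 3 would be kept. The `patience` value is itself a hyperparameter: too small and training may stop on a noisy blip, too large and compute is spent on epochs that only overfit.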

5. What are the potential benefits of fine-tuning pre-trained models?

Fine-tuning offers several advantages, including faster training times, improved performance on specific tasks, reduced computational costs, and the ability to leverage knowledge from massive datasets.

[x]cube has been AI-native from the beginning, and we’ve been working with various versions of AI tech for over a decade. For example, we were working with BERT and GPT’s developer interface even before the public release of ChatGPT.

One of our initiatives has significantly improved the OCR scan rate for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement and chat-based interfaces.

Interested in transforming your business with generative AI? Talk to our experts over a FREE consultation today!